America’s next top model - 2025

We’ve been witnessing AI progress at an unprecedented pace. You log off on a Friday thinking, well damn, this new GPT looks fancy: it can make cute Ghibli images of you and your situationship. Then you wake up the next day to find that something else has popped up, and the guy she told you not to worry about beat you to the new trendy thing, because apparently the new Claude can do that too now?

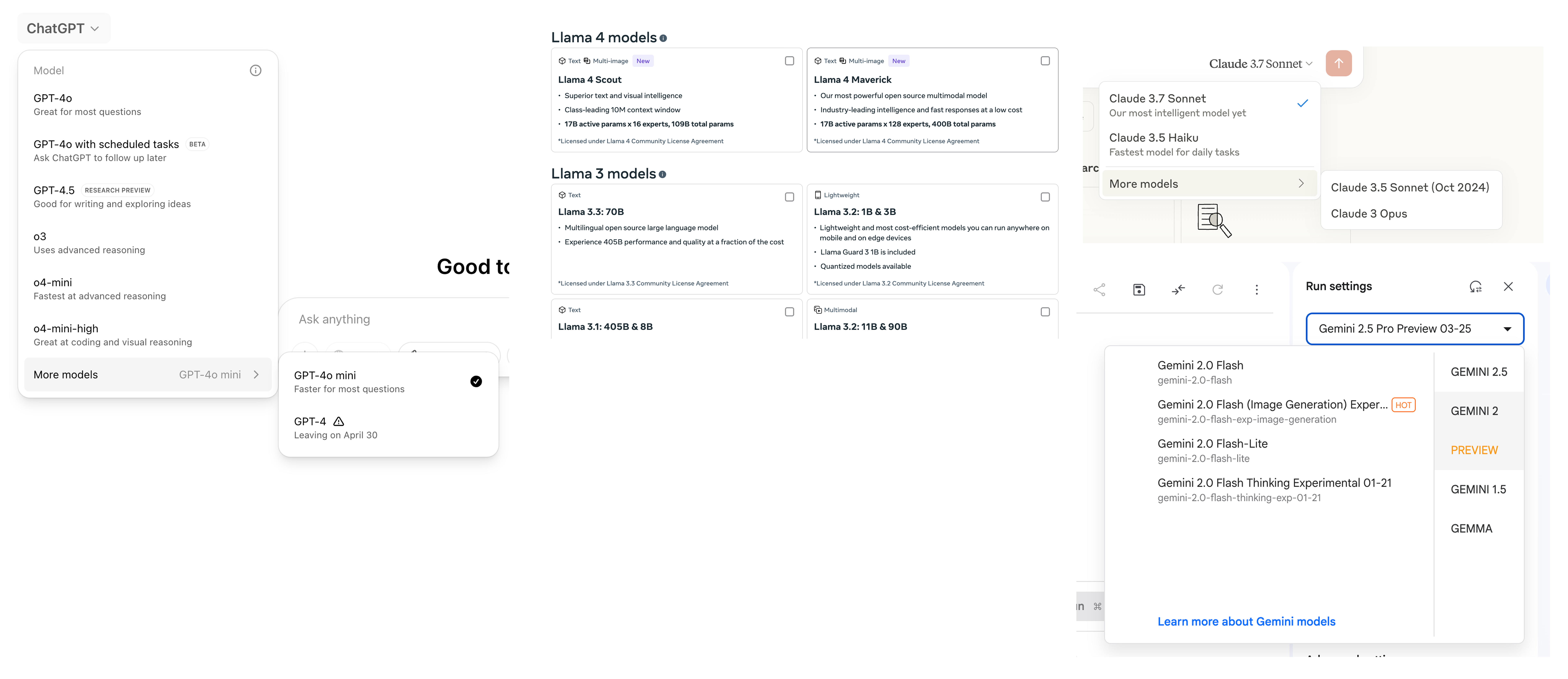

How often have you opened ChatGPT and gone, “Why do I have more choices than Baskin-Robbins? And which model am I supposed to use when?”

You’re not alone. First, the model names don’t help, be it GPT or Sonnet. Second, they’re making models while we sleep, which means they’re gonna keep coming.

But first of all, since we’re not a recipe blog (or a recipe for disaster, for that matter), here’s the dataset up front: Download The Full Dataset Here

What’s in the Dataset?

This comprehensive collection tracks 120 foundation models from various developers, including details on:

- Model names and developers

- Access types (UI, API, Open Source status)

- Technical specifications (parameter counts, context windows)

- Performance metrics (MMLU, MATH, HumanEval, GPQA, TruthfulQA)

- Release dates and knowledge cutoff dates

- Licensing information

The dataset provides a fascinating window into the AI landscape, with models from major players like OpenAI, Google, Meta, Anthropic, as well as open-source contributors like Mistral AI, Databricks, and many research institutions.
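If you want to poke at the data in code, here’s a minimal Python sketch for loading it. We’re assuming the download is a CSV, and the column names used here (`name`, `developer`, `open_source`, `mmlu`, `math`, `context_window`) are hypothetical stand-ins, so check the real header first. Blank cells are kept as `None` rather than zero, in case some models don’t report every benchmark.

```python
import csv
import io

# Hypothetical column names; check the real CSV header before relying on these.
NUMERIC_COLUMNS = ("mmlu", "math", "context_window")


def load_models(lines):
    """Parse dataset rows (any iterable of CSV lines, e.g. an open file) into dicts.

    Numeric columns become floats; blank cells become None so missing
    benchmark scores are not mistaken for zeros.
    """
    rows = []
    for row in csv.DictReader(lines):
        for col in NUMERIC_COLUMNS:
            value = (row.get(col) or "").strip()
            row[col] = float(value) if value else None
        rows.append(row)
    return rows


# Tiny made-up sample standing in for the real file.
sample = io.StringIO(
    "name,developer,open_source,mmlu,math,context_window\n"
    "Model A,Lab X,yes,70.1,,128000\n"
    "Model B,Lab Y,no,85.0,60.5,200000\n"
)
models = load_models(sample)
print(models[0]["name"], models[0]["math"])  # Model A None
```

With the real file, `with open("models.csv", newline="", encoding="utf-8") as f: models = load_models(f)` should do the trick; `models.csv` is a placeholder name.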

Key Insights from the Data

The Rise of Open Source

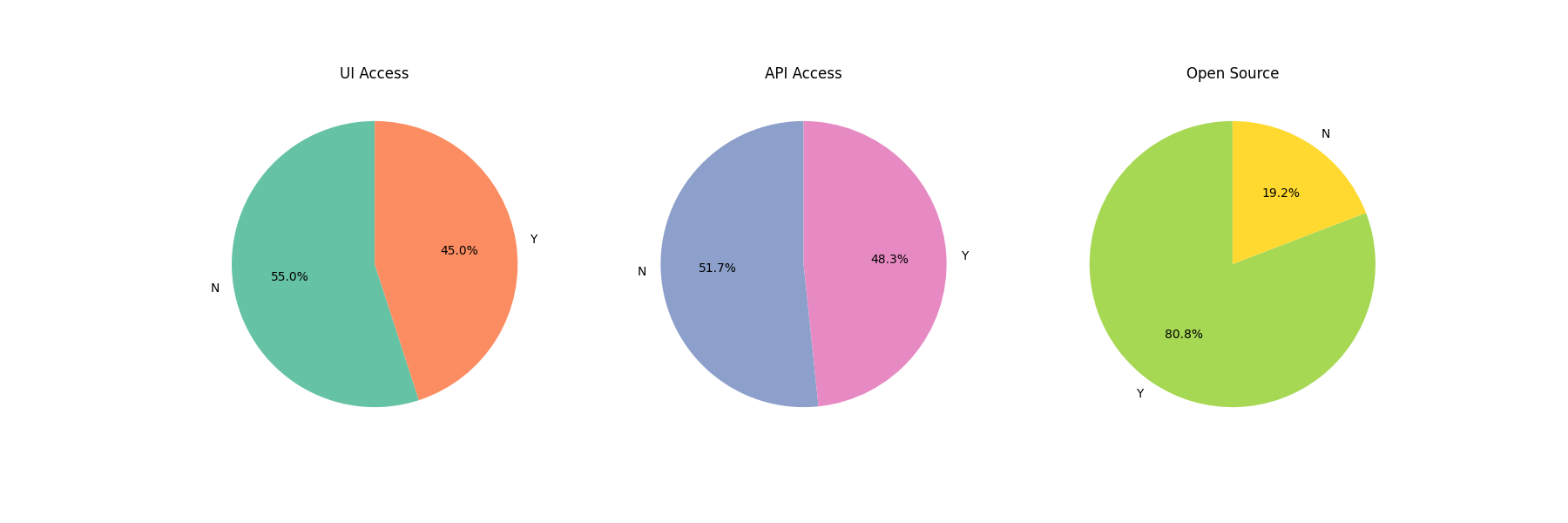

One of the most striking findings is that 80.8% of the models in the dataset are open source, compared to just 19.2% that are proprietary. This represents a massive shift in the AI ecosystem, making powerful models accessible to developers, researchers, and businesses of all sizes.
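The headline split is plain arithmetic over a yes/no column. Here’s a small sketch, assuming a hypothetical `open_source` column holding `yes`/`no` values. The toy rows use 97 and 23 because, out of 120 models, 97 is the only whole count that rounds to 80.8%.

```python
TRUTHY = {"yes", "true", "1"}


def share(rows, column):
    """Percentage of rows whose `column` holds a yes/true flag, rounded to 0.1."""
    if not rows:
        return 0.0
    hits = sum(1 for r in rows if str(r.get(column, "")).strip().lower() in TRUTHY)
    return round(100 * hits / len(rows), 1)


# Toy rows reconstructed from the percentages: 97 open, 23 proprietary.
rows = [{"open_source": "yes"}] * 97 + [{"open_source": "no"}] * 23
print(share(rows, "open_source"))  # 80.8
```

Pointed at an access column instead, the same helper turns 54 of 120 into 45.0% and 58 of 120 into 48.3%, matching the UI and API figures below.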

When it comes to how these models can be accessed:

- 45% offer UI access, while 55% don’t

- 48.3% provide API access, while 51.7% don’t

This suggests there’s still significant room for growth in making these models more accessible through user interfaces and APIs.
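Those two percentages are marginals: on their own they don’t tell you how many models have UI access, API access, both, or neither. A cross-tab does. Here’s a sketch, assuming hypothetical `ui_access` and `api_access` columns with yes/no values (the rows are made up):

```python
from collections import Counter


def is_yes(value):
    """Interpret a yes/no style cell; None or blank counts as no."""
    return str(value).strip().lower() in {"yes", "true", "1"}


def access_breakdown(rows):
    """Count models by (has UI access, has API access)."""
    return Counter(
        (is_yes(r.get("ui_access")), is_yes(r.get("api_access"))) for r in rows
    )


rows = [
    {"ui_access": "yes", "api_access": "yes"},
    {"ui_access": "no", "api_access": "yes"},
    {"ui_access": "no", "api_access": "no"},
    {"ui_access": "no", "api_access": "no"},
]
counts = access_breakdown(rows)
print(counts[(False, False)])  # 2
```

The `(False, False)` bucket is the interesting one: for open-source models, it presumably means downloading the weights is the main way in.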

Model Release Acceleration

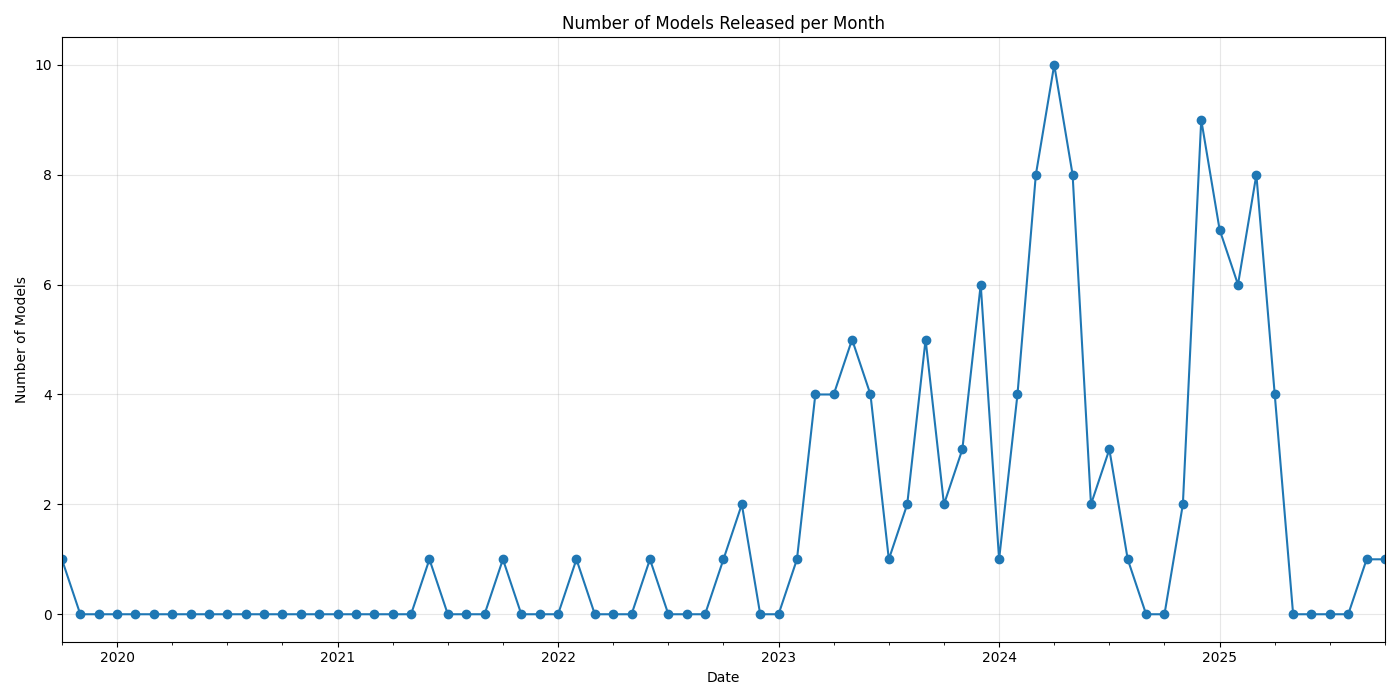

The pace of model releases has dramatically increased over the past few years. Looking at the timeline, we can see:

- Late 2022 saw the beginning of consistent releases

- 2023 marked a significant uptick with multiple models released each month

- 2024 was explosive, with peaks of up to 10 models released in a single month

- The first quarter of 2025 has maintained this momentum
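To rebuild a release timeline like the one above, you only need to bucket release dates by month. A minimal sketch, assuming a hypothetical `release_date` column in ISO `YYYY-MM-DD` format (the dates below are made up):

```python
from collections import Counter
from datetime import date


def releases_per_month(rows):
    """Count model releases per (year, month), oldest month first."""
    counts = Counter()
    for r in rows:
        raw = (r.get("release_date") or "").strip()
        if not raw:
            continue  # skip models with no recorded release date
        released = date.fromisoformat(raw)
        counts[(released.year, released.month)] += 1
    return dict(sorted(counts.items()))


rows = [
    {"release_date": "2024-05-01"},
    {"release_date": "2024-05-20"},
    {"release_date": "2023-12-15"},
    {"release_date": ""},
]
monthly = releases_per_month(rows)
print(monthly)  # {(2023, 12): 1, (2024, 5): 2}
```

Months with no releases simply don’t appear, so fill them in with zeros before plotting or fitting a trend.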

Performance Evolution

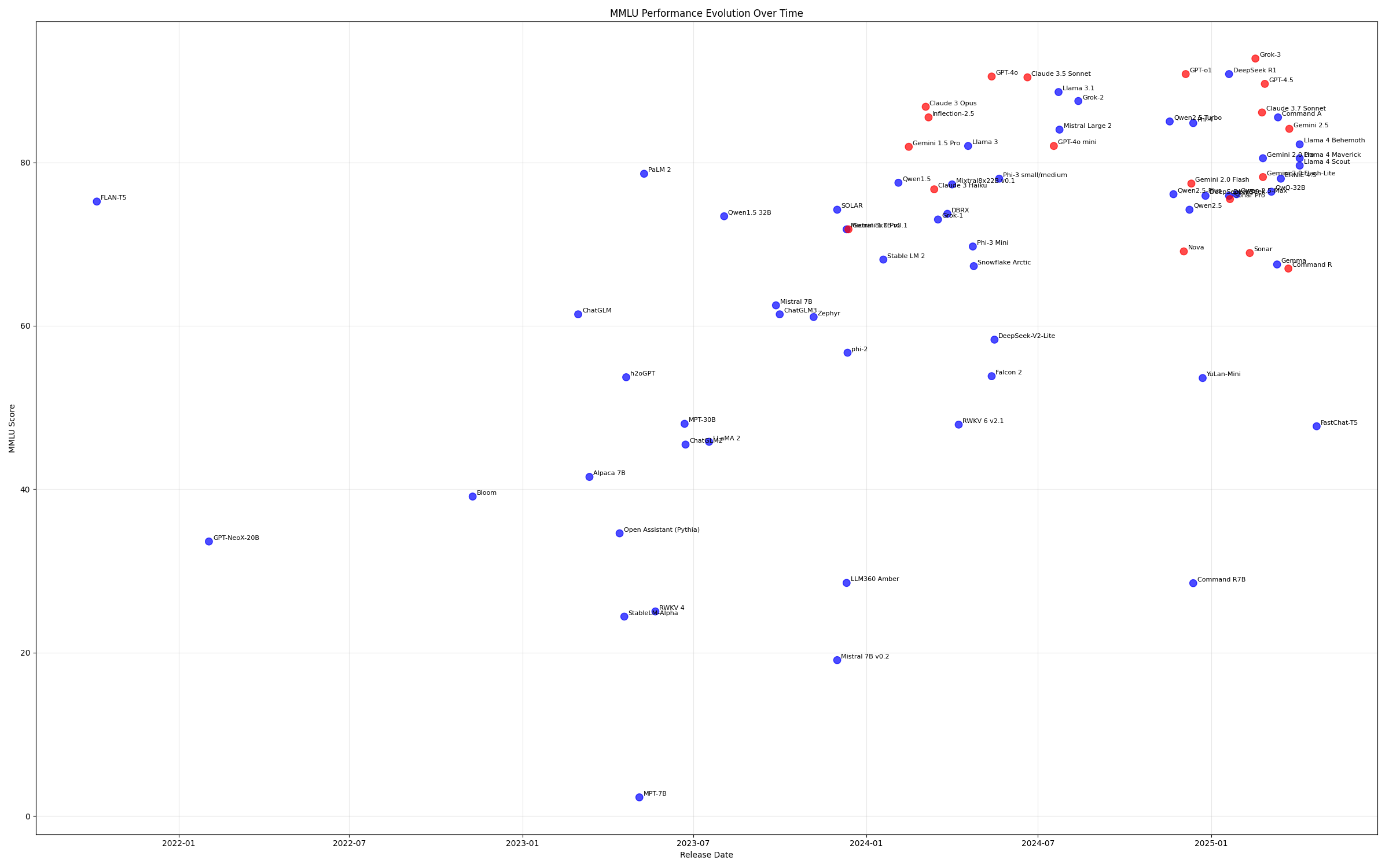

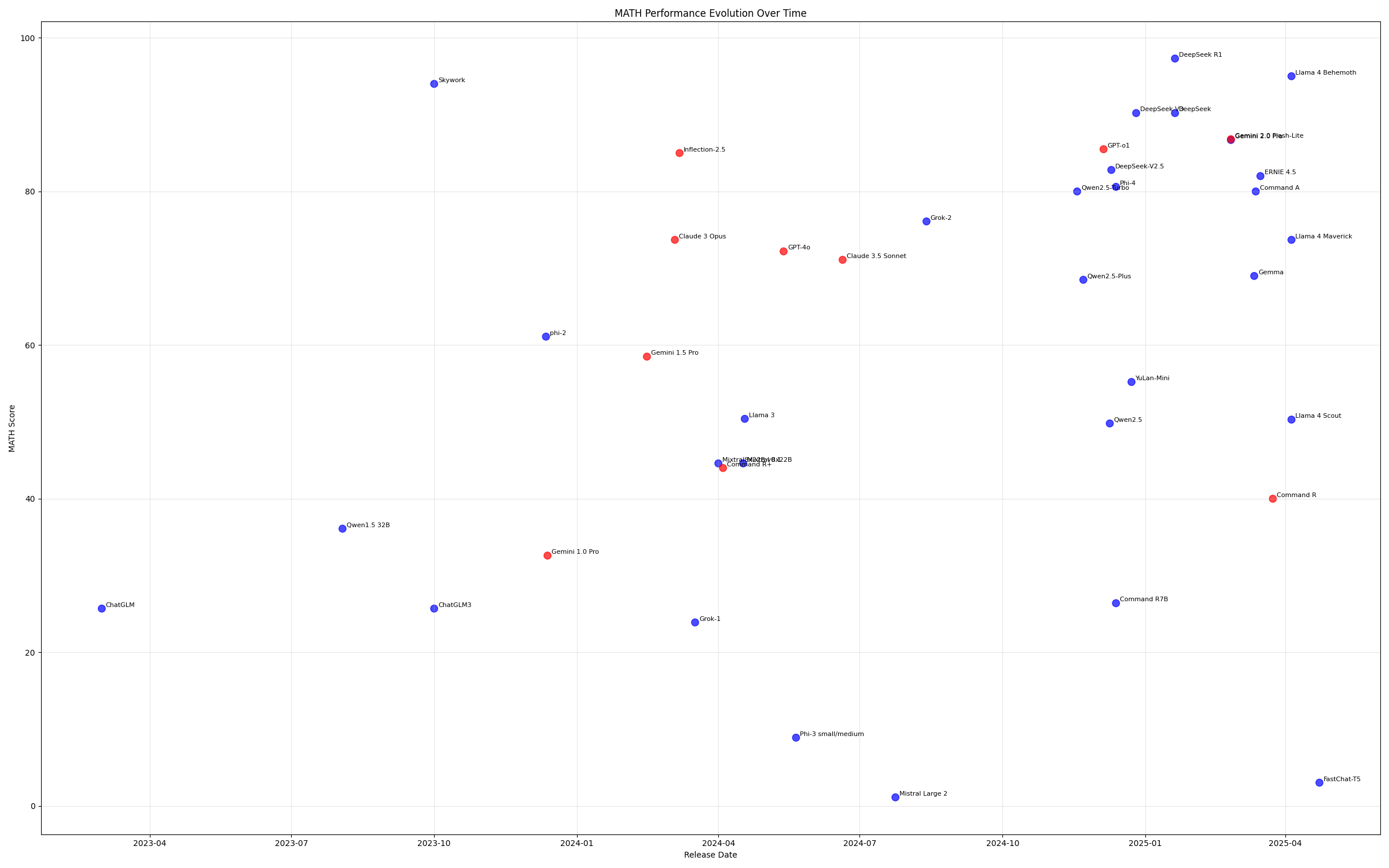

The dataset also reveals fascinating trends in model performance across key benchmarks, including MMLU (Massive Multitask Language Understanding), GPQA (Graduate-Level Google-Proof Q&A), HumanEval, MATH, and TruthfulQA. The graphs above show how scores have evolved over time, with blue dots for open-source models and red dots for closed-source models. Safe to say, the competition is fierce!
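One way to turn those scatter plots into a number is a running best score: for each group, the highest score reached by any model released up to that date. This is our own summary, not necessarily how the charts were built, and it assumes hypothetical `release_date`, `open_source`, and benchmark columns, with scores already parsed to floats and missing ones stored as `None`:

```python
from datetime import date


def frontier(rows, benchmark):
    """Running best `benchmark` score over time, split into open and closed models.

    Returns {"open": [(date, best_so_far), ...], "closed": [...]}.
    """
    scored = [r for r in rows if r.get(benchmark) is not None and r.get("release_date")]
    scored.sort(key=lambda r: r["release_date"])  # ISO dates sort chronologically as strings
    best = {"open": float("-inf"), "closed": float("-inf")}
    result = {"open": [], "closed": []}
    for r in scored:
        is_open = str(r.get("open_source", "")).strip().lower() == "yes"
        group = "open" if is_open else "closed"
        best[group] = max(best[group], r[benchmark])
        result[group].append((date.fromisoformat(r["release_date"]), best[group]))
    return result


# Made-up rows; the last one has no score and is skipped.
rows = [
    {"release_date": "2023-01-01", "open_source": "yes", "mmlu": 50.0},
    {"release_date": "2023-03-01", "open_source": "no", "mmlu": 70.0},
    {"release_date": "2023-06-01", "open_source": "yes", "mmlu": 45.0},
    {"release_date": "2024-01-01", "open_source": "yes", "mmlu": 80.0},
    {"release_date": "2024-02-01", "open_source": "no", "mmlu": None},
]
fr = frontier(rows, "mmlu")
print([score for _, score in fr["open"]])  # [50.0, 50.0, 80.0]
```

A dip like the 45.0 model doesn’t lower the line, which is the point: the frontier only moves when someone beats the previous best.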

The MMLU benchmark has become the go-to test for seeing just how smart our LLMs really are. Think of it as the SAT, MCAT, and bar exam all rolled into one massive challenge: 15,908 multiple-choice questions covering a mind-boggling 57 subjects. We’re talking everything from astronomy to world history, professional law to medicine.

It’s 2025, and our models have not just caught up to human experts (89.8%) but zoomed right past them. DeepSeek R1 is flexing with a 90.8% score, Claude 3.5 Sonnet is right there at 90.5%, and OpenAI’s o1 is showing off with an incredible 92.3%!
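Here’s that comparison with the human-expert baseline as a tiny sketch, using only the scores quoted above; the helper name `above_baseline` is just for illustration:

```python
HUMAN_EXPERT_MMLU = 89.8  # expert-level baseline quoted above

mmlu_scores = {"DeepSeek R1": 90.8, "Claude 3.5 Sonnet": 90.5, "OpenAI o1": 92.3}


def above_baseline(scores, baseline=HUMAN_EXPERT_MMLU):
    """(model, margin in points) for every model beating the baseline, best first."""
    margins = [(name, round(s - baseline, 1)) for name, s in scores.items() if s > baseline]
    return sorted(margins, key=lambda pair: pair[1], reverse=True)


print(above_baseline(mmlu_scores))
# [('OpenAI o1', 2.5), ('DeepSeek R1', 1.0), ('Claude 3.5 Sonnet', 0.7)]
```

So “zoomed right past” means a margin of under three percentage points, which is still a big deal on a test this broad.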

Although they are language models, our LLMs are getting good at math too! The MATH benchmark is the ultimate math test for AI models. It’s not your basic arithmetic: we’re talking competition-level algebra, geometry, number theory, probability, and precalculus problems that would give most college students nightmares.

Just two years ago, in early 2023, most AI models were basically failing math class, scoring below 50% on these problems. Fast forward to today, and they’re absolutely acing them!

DeepSeek R1 is the current math champion with a mind-blowing 97.3% score. That’s not just passing; that’s getting almost EVERY question right!

The leaderboard is packed with other math superstars too:

- Gemini 2.0 Flash-Lite flexing with 86.8%

- OpenAI’s o1 killing it with 85.5%

- Claude 3.5 Sonnet hitting a respectable 71.1%

Newer models like OpenAI’s o3 and Gemini 2.5 are now benchmarked on harder tests like AIME 2025, which pose more of a challenge, and they continue to post scores near or above 90% there too!
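The “below 50% in early 2023” point is easy to check once you have per-model MATH scores and release dates. A sketch, assuming hypothetical `math` and `release_date` columns; the rows are toy data, not the real dataset:

```python
from collections import defaultdict


def share_below(rows, benchmark, threshold=50.0):
    """Per release year, the percentage of scored models below `threshold`."""
    totals = defaultdict(int)
    below = defaultdict(int)
    for r in rows:
        score, released = r.get(benchmark), r.get("release_date")
        if score is None or not released:
            continue  # unscored or undated models don't count either way
        year = int(released[:4])  # assumes ISO "YYYY-MM-DD" dates
        totals[year] += 1
        below[year] += score < threshold  # True counts as 1
    return {year: round(100 * below[year] / totals[year], 1) for year in sorted(totals)}


rows = [
    {"release_date": "2023-02-01", "math": 30.0},
    {"release_date": "2023-04-01", "math": 45.0},
    {"release_date": "2023-09-01", "math": 60.0},
    {"release_date": "2024-03-01", "math": 70.0},
    {"release_date": "2024-08-01", "math": 48.0},
    {"release_date": "2025-01-01", "math": 95.0},
    {"release_date": "2025-02-01", "math": None},
]
print(share_below(rows, "math"))  # {2023: 66.7, 2024: 50.0, 2025: 0.0}
```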

How to Use This Dataset

For those of us working on AI applications, this dataset is useful in several ways:

Model Selection: When choosing which models to integrate into applications and workflows, this dataset helps you make informed decisions based on performance metrics, context window sizes, and access types.
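For model selection, a filter plus a sort goes a long way. The thresholds and the `context_window`, `mmlu`, and `api_access` column names below are hypothetical; swap in whatever your application actually needs:

```python
def shortlist(rows, min_context=128_000, min_mmlu=80.0, need_api=True):
    """Models meeting every requirement, highest MMLU first."""
    picks = [
        r
        for r in rows
        if (r.get("context_window") or 0) >= min_context
        and (r.get("mmlu") or 0) >= min_mmlu
        and (not need_api or str(r.get("api_access", "")).lower() == "yes")
    ]
    return sorted(picks, key=lambda r: r.get("mmlu") or 0, reverse=True)


# Made-up candidates.
rows = [
    {"name": "Model A", "context_window": 128_000, "mmlu": 85.0, "api_access": "yes"},
    {"name": "Model B", "context_window": 32_000, "mmlu": 90.0, "api_access": "yes"},
    {"name": "Model C", "context_window": 1_000_000, "mmlu": 88.0, "api_access": "no"},
    {"name": "Model D", "context_window": 200_000, "mmlu": 82.0, "api_access": "yes"},
]
print([r["name"] for r in shortlist(rows)])  # ['Model A', 'Model D']
```

Model B drops out on context length despite the best score, and Model C only makes the cut once you’re willing to self-host (`need_api=False`).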

Competitive Analysis: If you’re building AI-powered tools, understanding the broader landscape helps position your solutions effectively.

Data Enrichment Opportunities: The dataset itself could be enriched with additional information scraped from the web, such as:

- Pricing information for commercial models

- Community ratings and reviews

- Real-world use cases and implementations

- Integration complexity scores
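Most enrichment boils down to a left join on model name. A minimal sketch, where the pricing dict and its `usd_per_1m_input_tokens` field are hypothetical stand-ins for whatever you scrape. Names are normalized loosely (lowercase, collapsed whitespace) because scraped names rarely match exactly; real data will likely need more aggressive matching than this.

```python
def normalize(name):
    """Loose name key: lowercase, collapse whitespace."""
    return " ".join(str(name).lower().split())


def enrich(rows, extra, key="name"):
    """Left-join extra per-model fields onto dataset rows by normalized name.

    Rows with no match are kept unchanged, so nothing is silently dropped.
    """
    lookup = {normalize(k): v for k, v in extra.items()}
    enriched = []
    for r in rows:
        merged = dict(r)  # copy so the original rows stay untouched
        merged.update(lookup.get(normalize(r.get(key, "")), {}))
        enriched.append(merged)
    return enriched


rows = [{"name": "Model A"}, {"name": "Model B"}]
# Hypothetical scraped pricing, keyed by a slightly different spelling.
pricing = {"model  a": {"usd_per_1m_input_tokens": 0.5}}
print(enrich(rows, pricing))
# [{'name': 'Model A', 'usd_per_1m_input_tokens': 0.5}, {'name': 'Model B'}]
```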

Trend Forecasting: By analyzing the release patterns and performance improvements, you can anticipate where the field is heading and plan your tech stack accordingly.
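As a very rough starting point, you can fit a straight line through monthly release counts and extrapolate. This is a naive least-squares trend on made-up counts, not a serious forecasting model; release counts are bursty, so treat the output as a sanity check at best.

```python
def linear_trend(counts):
    """Least-squares line through (month_index, count); returns (slope, intercept)."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two months of counts")
    mean_x = (n - 1) / 2  # mean of 0, 1, ..., n - 1
    mean_y = sum(counts) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast(counts, months_ahead=1):
    """Extrapolate the fitted line `months_ahead` months past the last count."""
    slope, intercept = linear_trend(counts)
    return intercept + slope * (len(counts) - 1 + months_ahead)


monthly_counts = [2, 3, 5, 6]  # made-up counts for four consecutive months
print(round(forecast(monthly_counts), 1))  # 7.5
```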

Fun Facts from the Data

- The average MMLU score across all models is 67.7%, while the average MATH score is 60.1%

- 54 models offer UI access, while 58 provide API access

- Context windows have expanded dramatically, with some models like Gemini 2.0 Flash supporting up to 2 million tokens.

- Parameter counts range from hundreds of millions to several trillion
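Stats like these are straightforward to recompute, with one catch: averages should only include models that actually report the benchmark, otherwise blanks drag the mean down. A sketch, assuming hypothetical `name`, `mmlu`, `math`, and `context_window` columns with missing values stored as `None` (rows are made up):

```python
from statistics import mean


def summarize(rows):
    """Averages over models that report each benchmark, plus the largest context window."""

    def avg(col):
        values = [r[col] for r in rows if r.get(col) is not None]
        return round(mean(values), 1) if values else None

    with_window = [r for r in rows if r.get("context_window")]
    largest = max(with_window, key=lambda r: r["context_window"]) if with_window else None
    return {
        "avg_mmlu": avg("mmlu"),
        "avg_math": avg("math"),
        "largest_context": largest["name"] if largest else None,
    }


rows = [
    {"name": "Model A", "mmlu": 60.0, "math": None, "context_window": 8_000},
    {"name": "Model B", "mmlu": 75.0, "math": 50.0, "context_window": 1_000_000},
    {"name": "Model C", "mmlu": None, "math": 70.0, "context_window": None},
]
print(summarize(rows))
# {'avg_mmlu': 67.5, 'avg_math': 60.0, 'largest_context': 'Model B'}
```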

How You Could Extend This Dataset with Gumbo

If you wanted to build on this foundation, here are some ways Gumbo could help you stay on top of every AI development:

- Automatically track model updates and new releases by scraping developer websites and GitHub repositories

- Enrich with up-to-date pricing information and usage restrictions for commercial models

- Track community engagement metrics like GitHub stars, forks, and issues

- And much more!
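To give a feel for the community-metrics idea, here’s a plain standard-library sketch against GitHub’s public REST API (`GET /repos/{owner}/{repo}`), which returns `stargazers_count`, `forks_count`, and `open_issues_count`. This is not how Gumbo does it, just a swapped-in illustration; the helper names are hypothetical, and unauthenticated requests are rate-limited to 60 per hour, so anything beyond a quick look should send a token.

```python
import json
import urllib.request


def repo_metrics(payload):
    """Pick engagement fields out of a GitHub /repos/{owner}/{repo} response."""
    return {
        "stars": payload.get("stargazers_count", 0),
        "forks": payload.get("forks_count", 0),
        # Note: GitHub's open_issues_count also includes open pull requests.
        "open_issues": payload.get("open_issues_count", 0),
    }


def fetch_repo_metrics(owner, repo, timeout=10):
    """Fetch live metrics for one repository (unauthenticated, so rate-limited)."""
    req = urllib.request.Request(
        f"https://api.github.com/repos/{owner}/{repo}",
        headers={"Accept": "application/vnd.github+json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return repo_metrics(json.load(resp))
```

Calling `fetch_repo_metrics("some-owner", "some-repo")` (placeholder names) once per model repo, on a schedule, would give you the engagement columns to join back onto the dataset.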

Get Started with the Dataset

Ready to explore this dataset for yourself? Download it and start building! Whether you’re researching the AI landscape, selecting models for your applications, or just curious about the rapid evolution of foundation models, this dataset provides a comprehensive overview of the field.

Happy data exploring, and see you next Friday with another free dataset!